Apple demonstrated a new search experience with Apple Intelligence named Apple Visual Intelligence. It looks and feels like Google Lens, but it uses the native iPhone camera and is built directly into Apple Intelligence.

Plus, it seems to use third-party search providers, like Google, OpenAI’s ChatGPT and Yelp, for its search results, depending on the type of query.

What it looks like. Here are some screenshots I grabbed from yesterday's Apple event. If you want to watch it, it starts at about the 57-minute mark in this video:

Looking to buy a bike you spotted on your walk? It says “Searching with Google…” after you snap a photo of it:

Though, the example provided of the search results looks somewhat “doctored”:

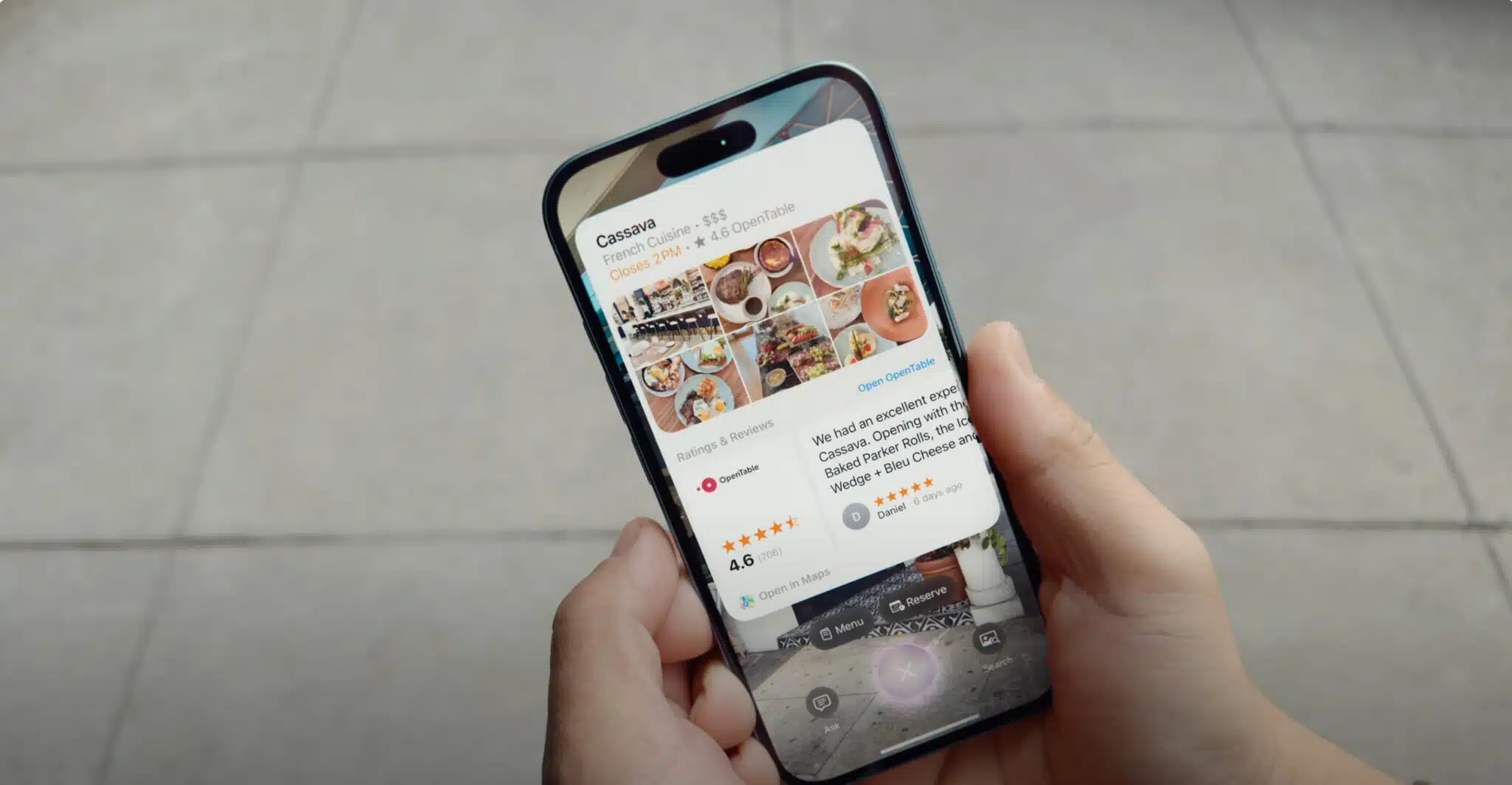

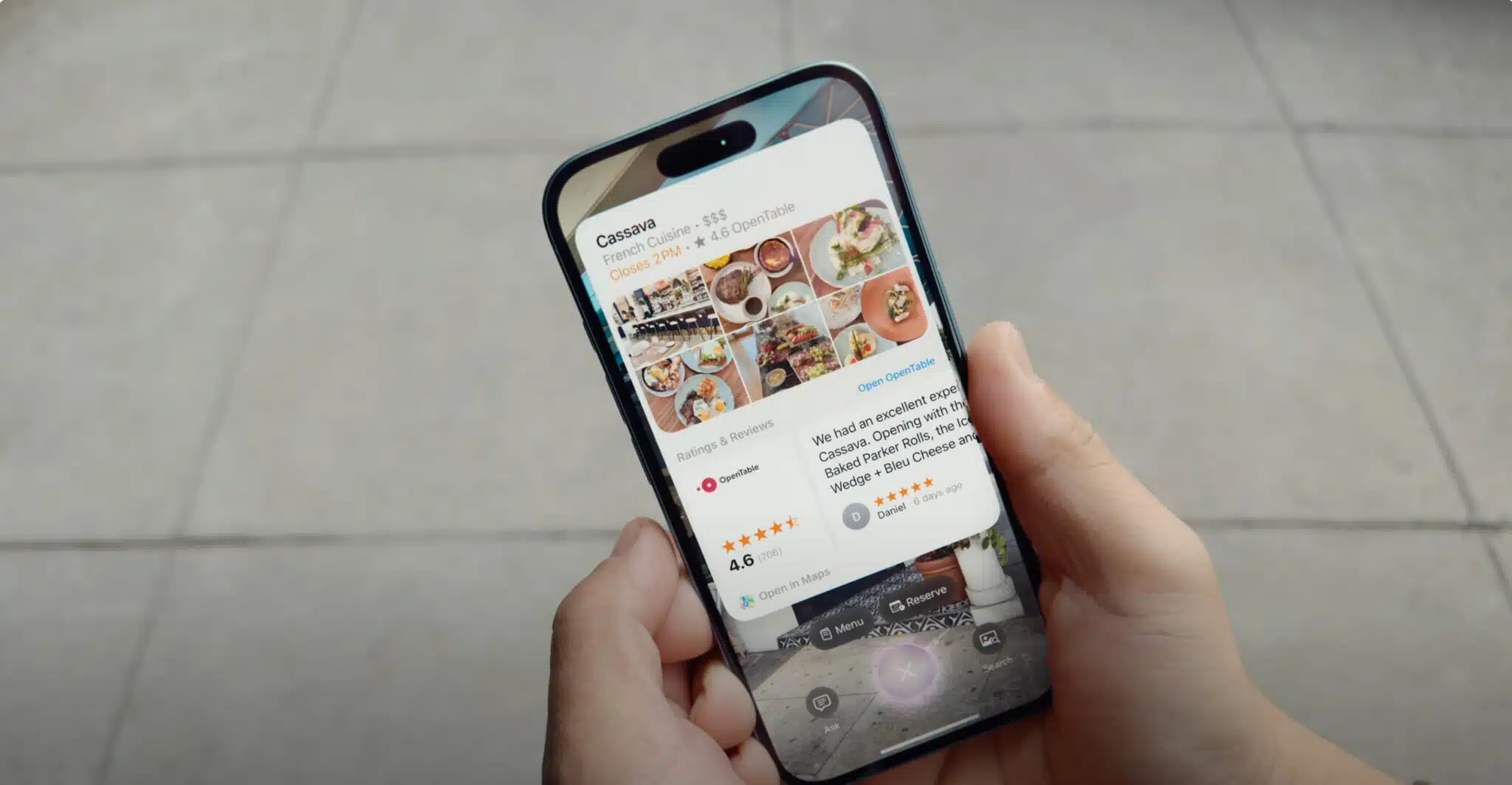

Here is an example of a local search result when someone wants more details on a restaurant they came across while walking. This seems to pull up the local search results in Apple Maps, which I believe are powered by Yelp and OpenTable.

Here is a close-up showing OpenTable options in Apple Maps:

Then here is an example of taking a photo of a homework assignment, where it uses OpenAI’s ChatGPT for help:

Why we care. Apple seems to be using AI as a tool rather than a foundation for its devices, integrating with Google, OpenAI and other search providers. There is clearly underlying AI and machine learning running on the Apple device, but the results seem to be coming from third parties.

An early beta review from the Washington Post suggests it has a long way to go. Specifically, it has issues with hallucinations, marking spam emails as priority, and other problems.