Google’s updated site reputation abuse policy attempts to address a growing issue in search: large, authoritative sites exploiting their domain strength to rank for content they don’t own or create.

While the policy is a step in the right direction, it doesn’t address the underlying systemic problems with Google’s algorithm that allow this abuse to thrive.

Understanding Google’s site reputation abuse policy

Google’s site reputation abuse policy was announced in March 2024, but the announcement was overshadowed by a major core update that same month.

As a result, what should have been a pivotal moment for addressing search manipulation was relegated to a footnote.

At its core, the policy targets large, authoritative websites that leverage their domain strength to rank for content they didn’t create.

It’s designed to stop these entities from acting as “hosts” for third-party content simply to exploit search rankings.

A clear example would be a high-authority business website hosting a “coupons” section populated entirely with third-party data.

Recently, Google expanded the policy’s scope to address even more scenarios.

In the updated guidelines, Google highlights its review of cases involving “varying degrees of first-party involvement,” citing examples such as:

- Partnerships through white-label services.

- Licensing agreements.

- Partial ownership arrangements.

- Other complex business models.

This makes it clear that Google isn’t just targeting programmatic third-party content abuse.

The policy now aims to curb extensive partnerships between authoritative sites and third-party content creators.

Some of these involve deeply integrated collaboration, where external entities produce content explicitly to leverage the host site’s domain strength for higher rankings.


Parasite SEO is a bigger issue than ever

These partnerships have become a significant challenge for Google to manage.

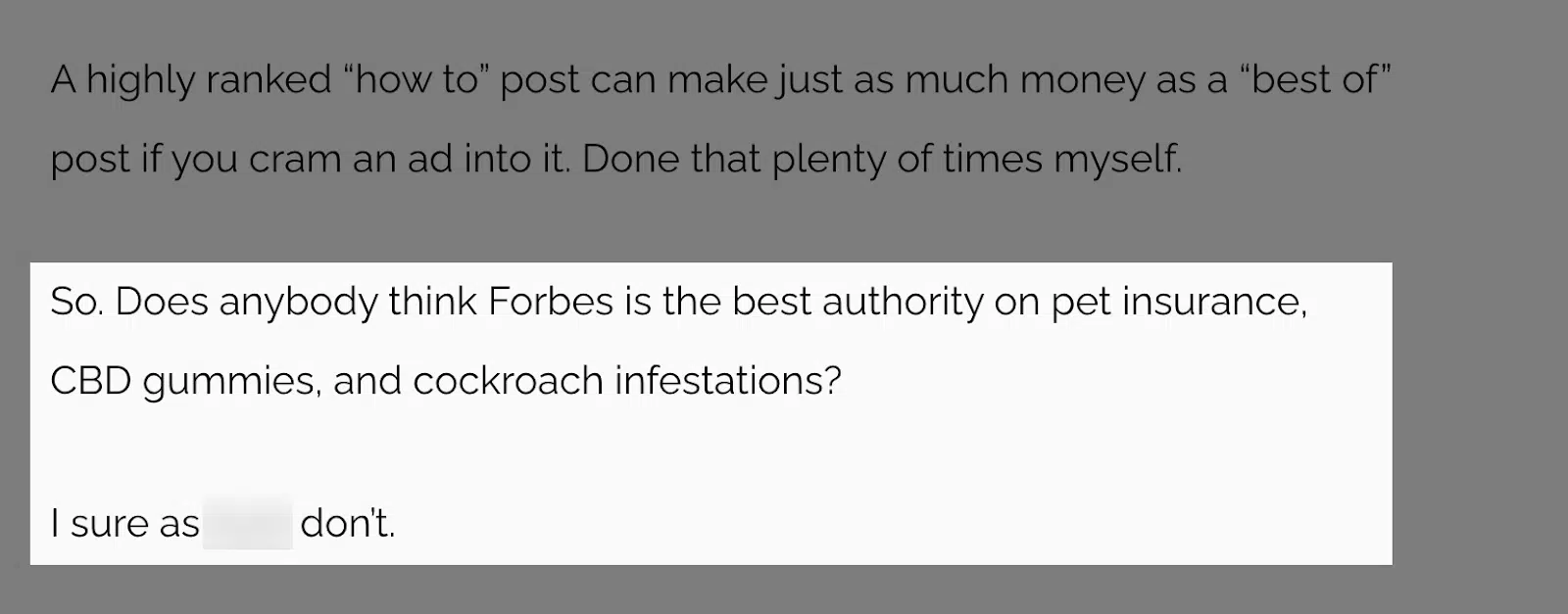

One of the most impactful SEO investigations this year was Lars Lofgren’s article, “Forbes Marketplace: The Parasite SEO Company Trying to Devour Its Host.”

The piece dives into Forbes Advisor’s parasite SEO program, developed in collaboration with Market.co, and details the substantial traffic and revenue generated by the partnership.

According to Lofgren, Forbes Advisor alone was estimated to be making roughly $236 million a year from this strategy.

As Lofgren puts it:

This highlights the systemic problem with Google search.

Forbes Advisor is just one of many parasite SEO programs that Lofgren investigates. If you want to go deeper, read his articles on other sites running similar programs.

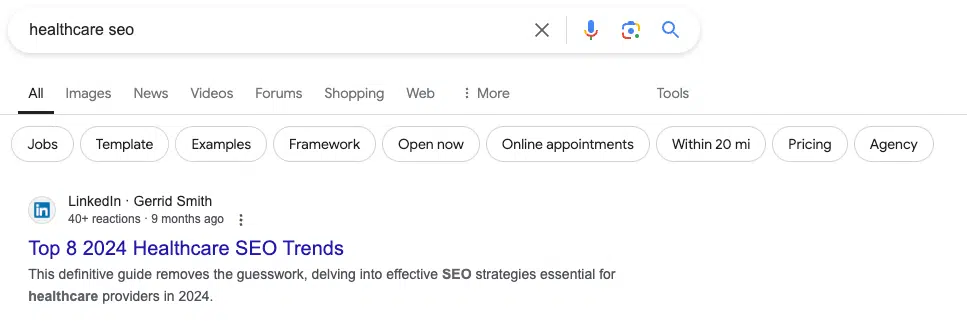

LinkedIn is another prime example. Over the past few years, users have increasingly leveraged LinkedIn’s UGC platform to capitalize on its powerful domain authority, pushing their content to the top of search results.

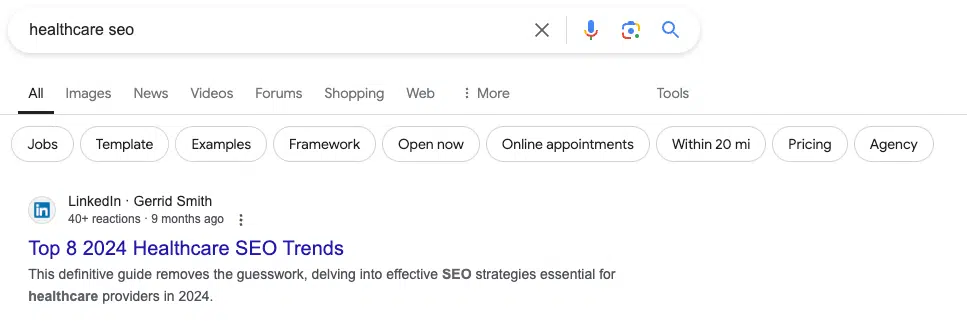

For instance, as of this writing, the top-ranking result for “healthcare SEO” isn’t from a specialized expert site but a LinkedIn Pulse article.

If you dig into LinkedIn Pulse’s query data, you’ll see a range of queries spanning business, adult topics, personal loans and more.

Clearly, LinkedIn isn’t the best source for all of these things, right?

The rise of programs designed to manipulate search results has likely pushed Google to introduce the site reputation abuse policy.


The bigger problem

This brings me to why the policy isn’t enough. The core issue is that these sites should never rank in the first place.

Google’s algorithm simply isn’t strong enough to prevent this abuse consistently.

Instead, the policy acts as a fallback: something Google can use to address egregious cases after they’ve already caused damage.

This reactive approach turns into a never-ending game of whack-a-mole that’s nearly impossible to win.

Worse yet, Google can’t possibly catch every instance of this happening, especially on a smaller scale.

Time and again, I’ve seen large sites rank for topics outside their core business simply because they’re, well, large sites.

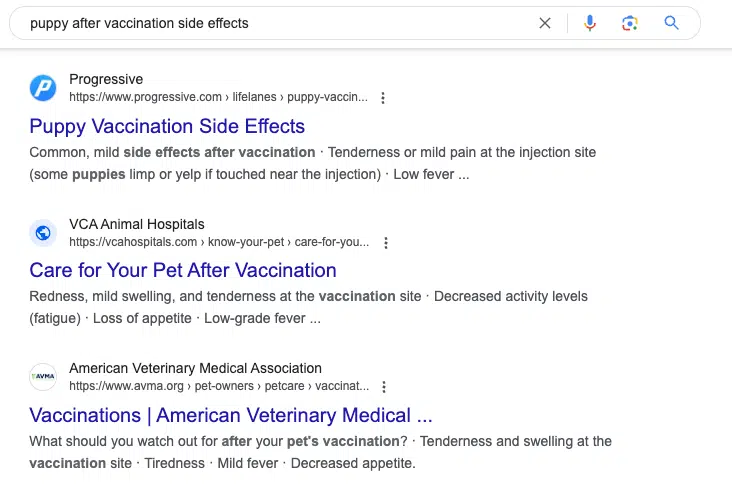

Here’s an example to illustrate my point. Progressive has a blog called Lifelines, which primarily covers topics related to its core business: insurance, driving tips, traffic laws, etc.

However, one of its blog posts ranks in Position 4 for the search query “pet after vaccination side effects,” above actual experts like the American Veterinary Medical Association.

The result in Position 1? It’s Rover.com, a technology company that helps pet owners find sitters. It’s still not a medical expert, yet it ranks by leveraging its strong domain.

I’m not suggesting that Progressive is engaging in anything nefarious here. This is likely just a one-off, off-topic post.

However, the larger issue is that Progressive could easily turn its Lifelines blog into a parasite SEO program if it wanted to.

With minimal effort, it’s ranking for a medical query, an area where E-E-A-T is supposed to make competition tougher.

The only way to stop this right now is for Google to spot it and enforce the site reputation abuse policy, but that could take years.

At best, the policy serves as a short-term fix and a warning to other sites attempting abuse.

However, it can’t address the broader problem of large, authoritative sites consistently outranking true experts.

What’s happening with Google’s algorithms?

The site reputation abuse policy is a temporary band-aid for a much larger systemic issue plaguing Google.

Algorithmically, Google should be better equipped to rank true experts in a given field and filter out sites that aren’t topical authorities.

One of the biggest theories is the increased weight Google places on brand authority.

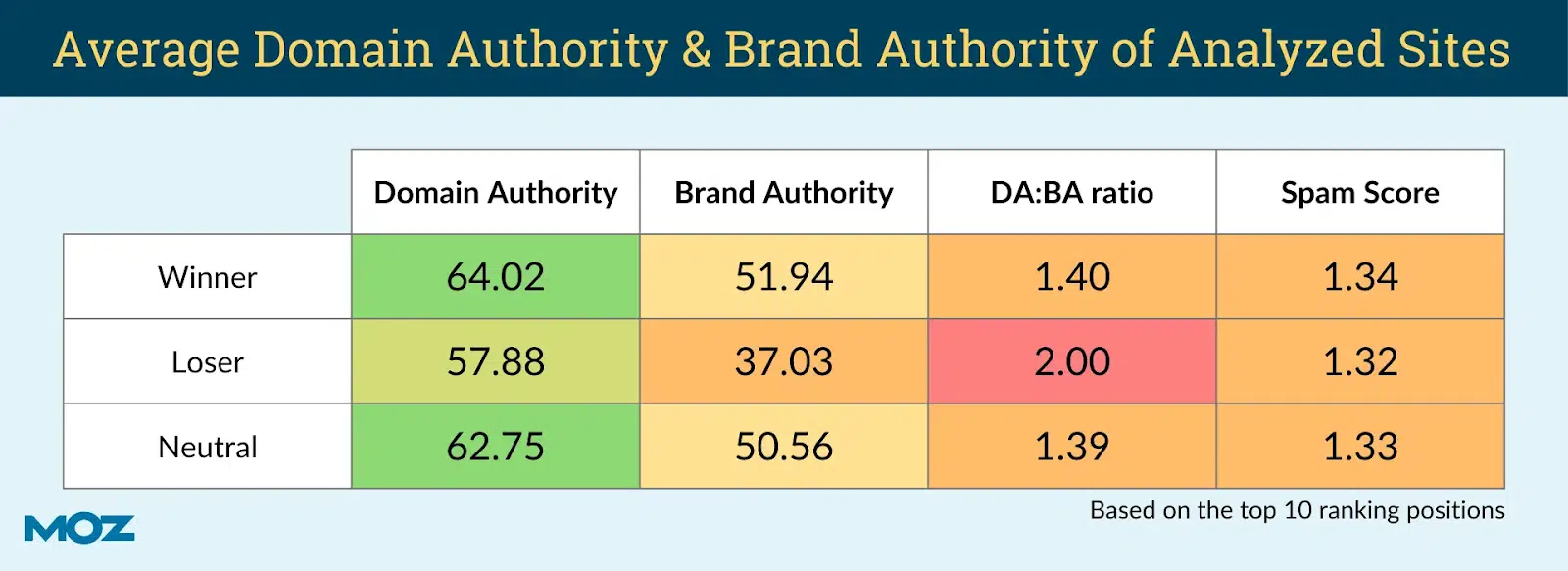

According to a recent Moz study, the winners of the helpful content update were more likely to have stronger “brand authority” than “domain authority.”

Essentially, the more branded searches a site receives, the more likely it is to emerge as a winner in recent updates.

This makes sense, as Google aims to rank leading brands (e.g., “Nike” for “sneakers”) for their respective queries.

However, big brands like Forbes, CNN, the Wall Street Journal and Progressive also receive a lot of branded search.

If Google places too much weight on this signal, it creates opportunities for large sites to either intentionally exploit or unintentionally benefit from the power of their domain or branded search.

This approach doesn’t reward true expertise in a specific area.
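To make the weighting concern concrete, here is a toy sketch in Python. It is not how Google ranks pages. It assumes a simple linear blend of two hypothetical signals between 0 and 1: `brand_strength` (a stand-in for branded search demand) and `topical_relevance` (a stand-in for expertise on the query’s topic), mixed by an invented `brand_weight` parameter. The point is only that once the brand term dominates, a large generalist site can outscore a specialist on a query outside its core business.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    brand_strength: float     # hypothetical 0-1 proxy for branded search demand
    topical_relevance: float  # hypothetical 0-1 proxy for expertise on the query's topic


def blended_score(site: Site, brand_weight: float) -> float:
    """Toy linear blend of two signals. brand_weight is an assumption, not a known Google value."""
    if not 0.0 <= brand_weight <= 1.0:
        raise ValueError("brand_weight must be between 0 and 1")
    return brand_weight * site.brand_strength + (1.0 - brand_weight) * site.topical_relevance


def winner(sites: list[Site], brand_weight: float) -> str:
    """Return the name of the site with the highest blended score."""
    return max(sites, key=lambda s: blended_score(s, brand_weight)).name


if __name__ == "__main__":
    # Made-up scores for illustration only.
    sites = [
        Site("generalist brand", brand_strength=0.9, topical_relevance=0.3),
        Site("specialist", brand_strength=0.4, topical_relevance=0.9),
    ]
    for weight in (0.2, 0.8):
        print(weight, winner(sites, weight))
```

With these invented numbers, the specialist comes out ahead at a brand weight of 0.2 and the generalist brand at 0.8. A linear blend is used only because it makes the trade-off easy to see.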

Right now, the site reputation abuse policy is the only tool Google has to address these issues when its algorithm fails.

While there’s no easy fix, it seems logical for Google to focus more on the topical authority aspect of its algorithm going forward.

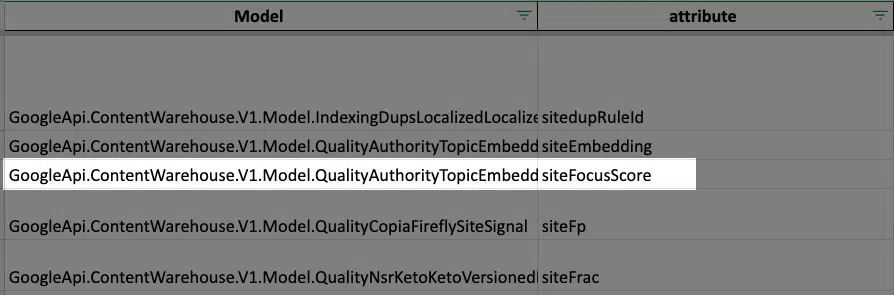

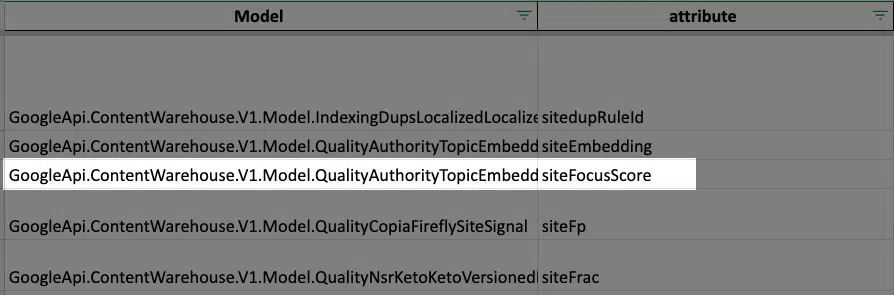

When we look at the Google Search API leaks, we can see that Google may use different variables to determine a site’s topical expertise.

For instance, the “siteEmbedding” variable implies they can categorize your entire site.

One that stands out to me is the “siteFocusScore” variable.

According to the leaks, it’s a “number denoting how much a site is focused on one topic.”

If sites start to dilute their focus too much, could this be a trigger indicating something larger is at play?
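The leaks describe what “siteFocusScore” means, not how it’s computed. As a purely hypothetical illustration, the sketch below assumes each page is represented by an embedding vector, treats the average of the normalized page vectors as a stand-in for a site embedding, and scores focus as the mean cosine similarity between each page and that average. The function name `site_focus_score` and the toy vectors are my own assumptions, not anything from the leaked documentation. A site that publishes far outside its niche pulls the average away from its core pages, so the score drops.

```python
import math


def _normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


def site_focus_score(page_embeddings: list[list[float]]) -> float:
    """Hypothetical focus score: mean cosine similarity of each page to the site centroid.

    Returns a value in [-1, 1]; higher means the pages cluster around one topic.
    """
    if not page_embeddings:
        raise ValueError("need at least one page embedding")
    unit = [_normalize(v) for v in page_embeddings]
    dim = len(unit[0])
    # Assumed "site embedding": the normalized average of the page vectors.
    centroid = _normalize([sum(v[i] for v in unit) / len(unit) for i in range(dim)])
    # Dot product of unit vectors = cosine similarity.
    sims = [sum(a * b for a, b in zip(v, centroid)) for v in unit]
    return sum(sims) / len(sims)


if __name__ == "__main__":
    # Toy 3-dimensional "topic" vectors, invented for illustration.
    focused = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.95, 0.0, 0.05]]
    diluted = focused + [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(round(site_focus_score(focused), 3), round(site_focus_score(diluted), 3))
```

In the example, adding two off-topic pages to an otherwise tightly focused set of toy vectors lowers the score noticeably. Whether Google computes anything like this is unknown.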

Moving forward

I don’t think the site reputation abuse policy is a bad thing.

At the very least, it serves as a much-needed warning to the web, with the threat of significant penalties potentially deterring the most egregious abuses.

However, in the short term, it feels like Google is admitting that there’s no programmatic solution to the problem.

Since the issue can’t be detected algorithmically, Google needs a way to threaten action when necessary.

That said, I’m optimistic that Google will figure this out in the long run and that search quality will improve in the years to come.

Contributing authors are invited to create content for Search Engine Land and are chosen for their expertise and contribution to the search community. Our contributors work under the oversight of the editorial staff, and contributions are checked for quality and relevance to our readers. The opinions they express are their own.