Crawling websites is a dynamic process that is closely tied to the quality and relevance of their content. Content, often called the king, holds significant sway over crawling activity. Google’s search results emphasize the importance of high-quality, user-centric content in driving increased crawl demand. As part of your SEO plan and strategy, you should thoroughly analyze your content before Google crawls it. In this blog, we’ll explore the critical SEO factors for improving website visibility. We’ll also discuss the concept of crawl budget, which defines the allocation of resources for promptly indexing pages, and we’ll emphasize the pivotal role of high-quality content in attracting both users and search engine crawlers.

Understanding user search demand helps you create great content that is aligned with user intent, which increases relevance and search ranking. Prioritizing user experience, including factors like site speed and mobile-friendliness, improves both user satisfaction and search engine performance. By addressing these factors comprehensively, this blog aims to give readers the insights to anticipate crawling challenges and ensure sustained website success.

What’s crawling?

Crawling is the process in which web crawlers, or spiders, continuously discover content on a website. It covers everything on a website, such as text, images, videos, and any other type of file that is accessible to the bots. Regardless of the format, content is discovered exclusively through links.

Significance of crawling

Before a search engine can index a website, it must crawl it. Crawling involves navigating through web pages, following links, and discovering new content. For website owners and marketers, understanding what influences this crawling process can significantly affect their search engine rankings.

What’s high-quality content?

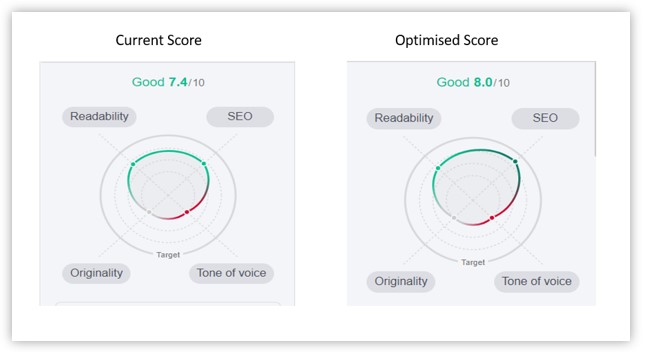

It’s no surprise that if you want your website to be crawled more, you need to create high-quality content. Every search engine has designed its algorithm to automatically prioritize the relevance, authority, and value of content. In simple terms, every search engine prioritizes high-quality content. If a search engine finds flaws in your content, it will start looking for another website with better content quality than yours.

Before you dive into the ocean of content creation for your website, you need to find out how to create good content, how to attract users to your website, how to make sure users spend more and more time on your website, how to make your website good enough to be at the top of search engine result pages (SERPs), and, most importantly, how to make search engines crawl your website.

So, if you want the answers to the questions above, you are in the right place. In this blog, you’ll get answers to all your questions about website crawling and how high-quality content can help your website get crawled more by search engines.

Factors that search engines consider when crawling a website

- Crawl budget:- Crawl budget is a topic that has preoccupied a large number of SEO experts for years. The myth about crawl budgets that has circulated in the digital marketing industry is that they limit the number of times a bot visits your website, which negatively affects the indexing and ranking of your website. In recent times, however, a revelation has emerged: in the majority of cases, the better your content quality is, the higher its chance of being indexed. This view dispels the misconception about crawl budget and directs everyone’s attention back to creating high-quality content that both the audience and search engines find useful.

- Contradicting the crawl budget myth

For a long time, crawl budget was understood to mean that search engines allot a fixed number of visits to each website. If you used up that budget on too many pages, the thinking went, people would not see your fresh content. Because of this misunderstanding, many website owners started prioritizing crawl efficiency optimization over content quality.

- Google prioritizes crawls depending on demand and quality

Google’s crawlers always prioritize crawls depending on demand and quality; that is evident because the crawlers are smart bots, not dense droids. Well-maintained websites with good content will automatically receive more crawls than those with thin and outdated content.

- Focus on URL structure:- The job of a web crawler is to discover URLs and download the content found on the website. During this process, it may send the content to the search engine index and extract links to other web pages.

The extracted links fall into the following categories:

- New URLs: Links that are unknown to the search engine.

- Known URLs that give the search engine no guidance: These URLs will be revisited periodically to determine whether any changes have been made to the page’s content.

- Known URLs that have been modified: These URLs should be recrawled and reindexed. The change can be signaled, for example, via the last-modified (lastmod) timestamp in an XML sitemap.

- Known URLs that haven’t been modified: These URLs will not be recrawled or reindexed.
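To make the four link categories above more concrete, here is a minimal Python sketch of how a crawler might sort an extracted link. It is an illustration only, not how any real search engine works: the function name `classify_url`, the category labels, and the idea of comparing a sitemap timestamp against a stored last-crawl time are all assumptions made for this example.

```python
from datetime import datetime
from typing import Dict, Optional

# Hypothetical labels for the four link categories described above.
NEW = "new"
KNOWN_NO_SIGNAL = "known, no change signal"
KNOWN_MODIFIED = "known, modified"
KNOWN_UNCHANGED = "known, unchanged"


def classify_url(
    url: str,
    last_crawled: Dict[str, datetime],
    sitemap_lastmod: Optional[datetime] = None,
) -> str:
    """Sort an extracted link into one of the four categories.

    last_crawled maps already-known URLs to the time of their last crawl.
    sitemap_lastmod is the sitemap timestamp for this URL, if one exists.
    """
    if url not in last_crawled:
        return NEW
    if sitemap_lastmod is None:
        # No change signal available, so the URL is revisited periodically.
        return KNOWN_NO_SIGNAL
    if sitemap_lastmod > last_crawled[url]:
        # The page changed after the last crawl: recrawl and reindex.
        return KNOWN_MODIFIED
    return KNOWN_UNCHANGED
```

Calling `classify_url("https://example.com/a", crawled, datetime(2024, 2, 1))` with a `crawled` record dated January 10, 2024 would place the URL in the modified category, because the sitemap timestamp is newer than the last crawl.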

- Check page indexability:- An important aspect of crawling is indexing. In simple terms, indexing is storing and organizing the information found on a website. The bot sent by a search engine renders the code on the site the way a browser does. It registers all the content, links, and metadata on a website. Indexing demands a considerable amount of computing resources, and not just for storage: rendering millions of websites takes substantial computing power.

- Quality of content:- So, what exactly do search engines consider high-quality content? While there is no universal answer, some fundamental tenets hold:

- User-oriented: Are users getting what they are looking for from your website? Is your content answering the user’s query? Your content should be informative, appealing, and well laid out.

- Expertise and authority: Does your content show deep knowledge of the topic users are searching for? Are you citing reputable, trustworthy sources and offering something unique to the user? Search engines value content produced by credible sources.

- Dependability: Can a user rely on the accuracy and integrity of your content? Search engines pay a great deal of attention to websites with a solid reputation for dependability.

- Freshness of content: Are you regularly updating your content with new information and insights? Your commitment to keeping your content fresh and relevant is something search engines rate very highly.

- Prioritize quality over quantity:- This is nothing new; everyone knows that quality carries more weight than quantity. High-quality content can not only increase crawl frequency but also:

- Improve search ranking: Search engine algorithms prefer websites with consistently useful information, which leads to higher search engine rankings.

- Increase user interaction: High-quality content not only keeps users engaged but also keeps them coming back for more, a great contribution to the user metrics that search engines take into account.

- Build the authority of your website: Over time, a reputation for exceptional content establishes your website as a credible source, leading to a stronger presence on the web.

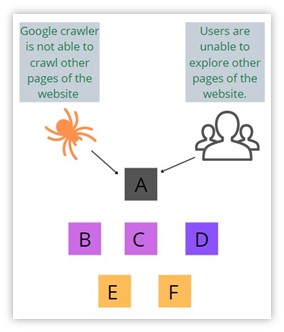

- Website structure and navigation:- The structure and navigation of a website are crucial to how effectively search engines can crawl and index its content.

- Internal linking: One important aspect that search engines evaluate is a website’s internal linking structure. Sites with clear, logical navigation and well-connected pages are more likely to be thoroughly crawled. For example, consider a blog where each post links to related articles, creating a network of interconnected content that is easier for search engines to discover and index.

- Sitemap: Providing a sitemap can help search engines discover and index content efficiently. A sitemap acts as a guide to your website, listing all the important pages and their relationships. By providing your sitemap to search engines, you help ensure that all your important pages are crawled and indexed promptly.
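Since content is discovered through links, a page that no other page links to is hard for a crawler to reach. The sketch below shows one way to spot such orphan pages using only Python’s standard library. It assumes a small site held in memory as a dictionary of path to HTML, with internal links written as site-relative paths; `LinkExtractor` and `find_orphans` are hypothetical names chosen for this example.

```python
from html.parser import HTMLParser
from typing import Dict, List, Set


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def find_orphans(pages: Dict[str, str], home: str = "/") -> Set[str]:
    """Return pages that no other page links to (the home page is exempt)."""
    linked: Set[str] = set()
    for path, html in pages.items():
        parser = LinkExtractor()
        parser.feed(html)
        # Ignore self-links: they do not help a crawler discover the page.
        linked.update(link for link in parser.links if link != path)
    return set(pages) - linked - {home}
```

On a real site you would first resolve relative and absolute URLs to a common form before comparing them; that step is left out here to keep the example short.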

- Website performance:- A website’s performance directly affects its crawlability and user experience.

- Page speed: Search engines prefer faster-loading websites because they improve user experience. Use tools like Google PageSpeed Insights to optimize your website’s loading times by compressing images, minifying CSS and JavaScript, and leveraging browser caching. Improved page speed not only benefits user engagement but also encourages search engine crawlers to explore more of your site efficiently.

- Mobile-friendliness: With the growth in mobile search, having a responsive design that works well on all devices is crucial. Make sure your website is mobile-friendly by using responsive design principles and testing across various devices and screen sizes. Mobile-friendly websites are more likely to be crawled and indexed by mobile-first search engines like Google.

- Technical considerations:- Technical aspects of a website can influence how search engines crawl and interpret its content.

- Robots.txt: A properly configured robots.txt file helps guide search engine crawlers on which parts of the site to crawl or avoid. Use robots.txt directives to block irrelevant or sensitive content from being crawled, such as admin pages, internal scripts, or duplicate content. However, be careful not to inadvertently block important pages that you want search engines to crawl.

- URL structure: Clear and descriptive Uniform Resource Locators (URLs) help search engines understand your website better. Use concise, keyword-rich URLs that accurately reflect the content of each page. Avoid long, complex URLs with unnecessary parameters or session IDs, as they can confuse search engine crawlers and hurt indexing efficiency.
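Both points above can be checked with Python’s standard library. The sketch below tests URLs against a sample robots.txt with `urllib.robotparser` and strips session parameters from a URL with `urllib.parse`. The robots.txt rules, the blocked paths, and the parameter names in `NOISE_PARAMS` are hypothetical values picked for illustration; adapt them to your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content; the blocked paths are hypothetical.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /scripts/
"""

# Hypothetical query parameters that carry no content meaning.
NOISE_PARAMS = {"sessionid", "sid", "phpsessid"}


def is_crawlable(url: str, user_agent: str = "*") -> bool:
    """Return True if the sample robots.txt lets this user agent fetch the URL."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


def clean_url(url: str) -> str:
    """Drop session-style parameters and keep the meaningful ones."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in NOISE_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )
```

Running `is_crawlable` over your most important URLs before deploying a robots.txt change is a cheap way to catch a rule that blocks more than you intended.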

- Backlink profile:- Inbound links from other websites act as a strong signal of a website’s authority and relevance.

- Inbound links: Quality backlinks from reputable sites assure search engines that a website is authoritative and trustworthy. Focus on acquiring high-quality backlinks through content marketing, guest blogging, and relationship building within your industry. Quality backlinks not only improve your site’s crawlability but also play an important role in ranking your web pages higher in search engine result pages (SERPs).

- Website security:- Website security is increasingly important for search engines and user trust.

- HTTPS: Websites that use secure Hypertext Transfer Protocol (HTTPS) connections are favored by search engines over HTTP sites. Secure your website with a Secure Sockets Layer (SSL) certificate to encrypt data transmitted between users and your server. HTTPS websites are not only safer but may also rank better in search engine algorithms, making them more likely to be crawled and indexed.

- User experience signals:- User experience metrics can indirectly influence how search engines prioritize crawling and indexing.

- Bounce rate: A high bounce rate can signal to search engines that users are not finding your website content engaging or relevant. Reduce bounce rates by improving content quality, improving page usability, and optimizing calls-to-action so that visitors stay engaged and explore more pages.

- Dwell time: The amount of time users spend on a website can be a positive ranking signal. Create engaging, informative content that makes users stay on your site longer. Use multimedia such as videos, infographics, and interactive features to enhance user experience and extend dwell time.

- Social media activity:- Social media activity can indirectly influence search engine crawl rates and indexing frequency.

- Social sharing: Content that is shared and discussed on social media platforms may receive more attention from search engines. Encourage social sharing by integrating social sharing buttons on your website and consistently promoting your content on social media channels. Increased social engagement can lead to more frequent crawling and indexing of your website by search engines.

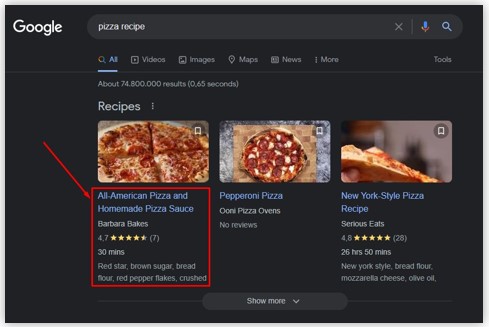

- Structured data:- Implementing structured data markup can improve how search engines understand and display your content in search results.

- Schema markup: Use schema.org markup to give search engines detailed information about your content, such as product reviews, event details, recipe instructions, and more. By implementing structured data, you can boost the visibility and relevance of your content in search engine results, potentially increasing crawl rates for pages enhanced with structured data.
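As a rough illustration of schema.org markup, the sketch below builds a JSON-LD snippet for an article in Python. The helper name `article_json_ld` and the sample values are assumptions for this example; the `Article` type and the `headline`, `author`, and `datePublished` properties come from the schema.org vocabulary.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str) -> str:
    """Build a JSON-LD script tag describing an article.

    Inputs are assumed to be trusted text; this sketch does no HTML escaping.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"
```

The returned tag would typically be placed in the page’s HTML, where crawlers that support structured data can read it alongside the visible content.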

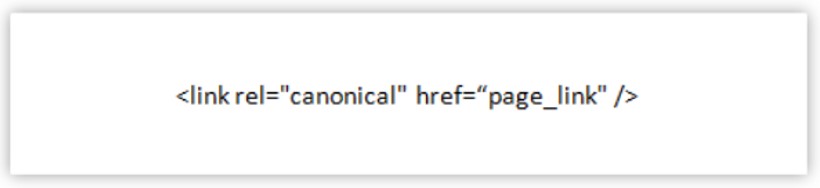

- Canonical tags:- Canonical tags help search engines identify the preferred version of duplicate or similar content.

- Canonicalization: Use canonical tags to indicate the source or preferred URL for content that exists in multiple locations on your website. This way, search engines can avoid indexing duplicate pages and consolidate ranking signals on the canonical version, optimizing crawl efficiency and indexing accuracy.
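To verify that a page actually declares the preferred URL you expect, you can read its canonical tag with a few lines of Python. This is a minimal sketch built on the standard library’s `html.parser`; the names `CanonicalFinder` and `canonical_url` are made up for this example, and it simply returns the first canonical link it finds.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and self.canonical is None:
            self.canonical = attr.get("href")


def canonical_url(html: str) -> Optional[str]:
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical
```

A duplicate page such as a filtered or paginated variant should return the URL of the main version, while the main version should return its own URL.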

- Content accessibility:- Make sure that your content is accessible to both users and search engines.

- Accessibility standards: Follow web accessibility guidelines to make your content accessible to users with disabilities. Search engines prioritize user-friendly websites, so improving accessibility can indirectly improve crawlability and indexing by enhancing the overall user experience.

- Content engagement metrics:- Analyzing user engagement metrics can provide important insights into content performance and crawl priorities.

- Analytics integration: Use tools like Google Analytics to keep an eye on user engagement metrics like time on page, scroll depth, and conversion rates. Identify high-performing content that attracts user engagement and prioritize it for frequent crawling and indexing by search engines.

- Link equity distribution:- Optimize the distribution of link equity across your website to ensure that important pages receive sufficient ranking signals.

- Dynamic rendering:- Dynamic rendering techniques optimize website content delivery for search engine crawlers.

- JavaScript SEO: Implement dynamic rendering to serve pre-rendered HTML content to search engine crawlers, ensuring complete indexing of JavaScript-heavy websites. Various tools are available to facilitate efficient dynamic rendering for improved crawlability.
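The core decision in dynamic rendering is which version of a page to serve to which visitor. The Python sketch below shows that decision in its simplest form, based on the User-Agent header. The token list and the function names `is_crawler` and `select_response` are assumptions for illustration; a production setup would rely on a maintained crawler list and a real pre-rendering service.

```python
# Hypothetical, deliberately short list of crawler tokens to look for.
CRAWLER_TOKENS = ("googlebot", "bingbot")


def is_crawler(user_agent: str) -> bool:
    """Guess whether a request comes from a search engine crawler."""
    lowered = user_agent.lower()
    return any(token in lowered for token in CRAWLER_TOKENS)


def select_response(user_agent: str, prerendered_html: str, app_shell_html: str) -> str:
    """Serve pre-rendered HTML to crawlers and the JavaScript app to everyone else."""
    if is_crawler(user_agent):
        return prerendered_html
    return app_shell_html
```

Both versions should contain the same content, so that crawlers and users see equivalent pages.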

- XML sitemaps:- Optimizing your XML sitemap helps search engines discover and index important website content.

- Sitemap optimization: Regularly update and optimize XML sitemaps to include all necessary URLs, prioritize important pages, and submit them to search engines, for example to Google via Google Search Console. XML sitemaps give search engines a roadmap to crawl and index important content efficiently.
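Keeping a sitemap up to date is easier when it is generated rather than edited by hand. The sketch below builds a small XML sitemap with Python’s `xml.etree.ElementTree`, including a last-modified date for each URL. The function name `build_sitemap` and the sample URLs are assumptions for this example; the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries: Iterable[Tuple[str, str]]) -> str:
    """Build sitemap XML from (url, last-modified date) pairs.

    Dates are expected as YYYY-MM-DD strings.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")
```

For example, `build_sitemap([("https://example.com/", "2024-05-01")])` returns a single-entry sitemap that you could write to `sitemap.xml` and submit to search engines.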

- Crawlability testing:- Conducting regular crawlability tests helps you identify and resolve technical issues that hinder website indexing.

Conclusion

In conclusion, understanding the complex processes of crawling and indexing is vital for anyone involved in SEO or website optimization. You need to focus on the important factors that influence crawling, such as crawl budget, high-quality content, and user experience. Contrary to the misconception that crawl budgets limit crawls, the emphasis is now on content quality, relevance, and user satisfaction.

Creating high-quality content remains a cornerstone of successful crawling and indexing strategies. Search engines prioritize content that is user-oriented, authoritative, dependable, and regularly updated. Quality content not only increases crawl frequency but also improves search rankings, user engagement, and website authority over time.

Additionally, technical aspects like site structure, performance, security, and accessibility play vital roles in facilitating efficient crawling and indexing. Internal linking, sitemaps, schema markup, canonical tags, and dynamic rendering techniques further optimize crawlability and improve content visibility in search results.

Regularly monitoring analytics, conducting crawlability tests, and adapting to evolving search engine algorithms are essential for maintaining optimal crawling performance. By prioritizing quality content creation, technical optimization, and user-centric design, website owners can ensure that their sites are crawled efficiently and indexed accurately, ultimately improving their visibility and search engine rankings.