In the world of SEO, URL parameters pose a significant problem.

While developers and data analysts may appreciate their utility, these query strings are an SEO headache.

Countless parameter combinations can split a single user intent across thousands of URL variations. This can cause complications for crawling, indexing, and visibility and, ultimately, lead to lower traffic.

The issue is we can’t simply wish them away, which means it’s crucial to master how to manage URL parameters in an SEO-friendly way.

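To do so, we will explore:

- What URL parameters are.

- The SEO issues they cause.

- How to assess the extent of your parameter problem.

- SEO solutions to tame URL parameters.

- Best practices for URL parameter handling.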

What Are URL Parameters?

URL parameters, also known as query strings or URI variables, are the portion of a URL that follows the ‘?’ symbol. They are made up of a key and a value pair, separated by an ‘=’ sign. Multiple parameters can be added to a single page when separated by an ‘&’.

The most common use cases for parameters are:

- Tracking – For example ?utm_medium=social, ?sessionid=123 or ?affiliateid=abc

- Reordering – For example ?sort=lowest-price, ?order=highest-rated or ?so=newest

- Filtering – For example ?type=widget, ?color=purple or ?price-range=20-50

- Identifying – For example ?product=small-purple-widget, ?categoryid=124 or ?itemid=24AU

- Paginating – For example ?page=2, ?p=2 or ?viewItems=10-30

- Searching – For example ?query=users-query, ?q=users-query or ?search=drop-down-option

- Translating – For example ?lang=fr or ?language=de

SEO Issues With URL Parameters

1. Parameters Create Duplicate Content

Often, URL parameters make no significant change to the content of a page.

A re-ordered version of the page is often not so different from the original. A page URL with tracking tags or a session ID is identical to the original.

For example, the following URLs would all return a collection of widgets.

- Static URL: https://www.example.com/widgets

- Tracking parameter: https://www.example.com/widgets?sessionID=32764

- Reordering parameter: https://www.example.com/widgets?sort=newest

- Identifying parameter: https://www.example.com?category=widgets

- Searching parameter: https://www.example.com/products?search=widget

That’s quite a few URLs for what is effectively the same content. Now imagine this over every category on your site. It can really add up.

The challenge is that search engines treat every parameter-based URL as a new page. So they see multiple variations of the same page, all serving duplicate content and all targeting the same search intent or semantic topic.

While such duplication is unlikely to cause a website to be completely filtered out of the search results, it does lead to keyword cannibalization and could downgrade Google’s view of your overall site quality, as these additional URLs add no real value.

2. Parameters Reduce Crawl Efficacy

Crawling redundant parameter pages distracts Googlebot. That reduces your site’s ability to get SEO-relevant pages indexed and increases server load.

Google sums up this point perfectly.

“Overly complex URLs, especially those containing multiple parameters, can cause problems for crawlers by creating unnecessarily high numbers of URLs that point to identical or similar content on your site.

As a result, Googlebot may consume much more bandwidth than necessary, or may be unable to completely index all the content on your site.”

3. Parameters Split Page Ranking Signals

If you have multiple permutations of the same page content, links and social shares may be coming in on various versions.

This dilutes your ranking signals. When you confuse a crawler, it becomes unsure which of the competing pages to index for the search query.

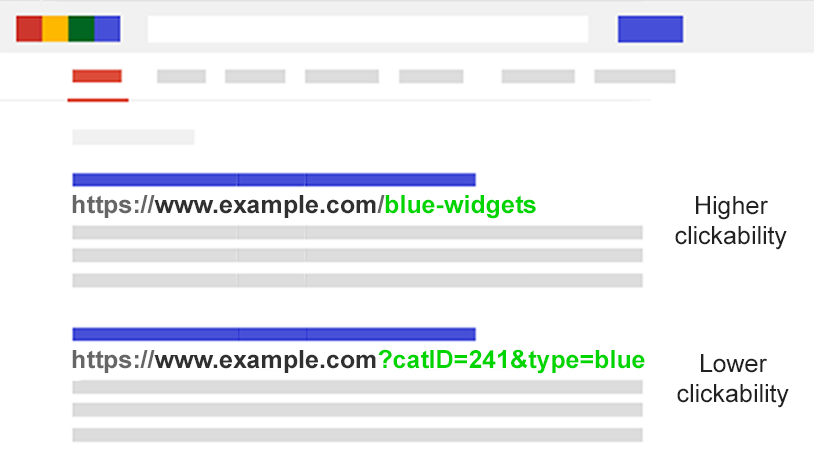

4. Parameters Make URLs Much less Clickable

Picture created by creator

Picture created by creatorLet’s face it: parameter URLs are ugly. They’re laborious to learn. They don’t appear as reliable. As such, they’re barely much less prone to be clicked.

This will influence web page efficiency. Not solely as a result of CTR influences rankings, but additionally as a result of it’s much less clickable in AI chatbots, social media, in emails, when copy-pasted into boards, or wherever else the total URL could also be displayed.

Whereas this may occasionally solely have a fractional influence on a single web page’s amplification, each tweet, like, share, e mail, hyperlink, and point out issues for the area.

Poor URL readability might contribute to a lower in model engagement.

Assess The Extent Of Your Parameter Problem

It’s important to know every parameter used on your website. But chances are your developers don’t keep an up-to-date list.

So how do you find all the parameters that need handling? How do you understand how search engines crawl and index such pages, and what value they bring to users?

Follow these five steps:

- Run a crawler: With a tool like Screaming Frog, you can search for “?” in the URL.

- Review your log files: See if Googlebot is crawling parameter-based URLs.

- Look in the Google Search Console page indexing report: In the samples of indexed pages and relevant non-indexed exclusions, search for ‘?’ in the URL.

- Search with site: inurl: advanced operators: Find out how Google is indexing the parameters you found by putting the key in a site:example.com inurl:key combination query.

- Look in the Google Analytics all pages report: Search for “?” to see how users engage with each of the parameters you found. Be sure to check that URL query parameters have not been excluded in the view settings.
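To turn the crawl and log-file steps into a single inventory, a short script can tally every query-string key across a list of URLs. This is a minimal sketch. It assumes you have already exported the crawled URLs, or the URLs Googlebot requested according to your logs, into a Python list. `parameter_inventory` is a hypothetical helper name, not part of any tool.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit


def parameter_inventory(urls):
    """Count how many URLs use each query-string key.

    Assumes `urls` is an iterable of absolute URLs, e.g. from a crawler export.
    """
    counts = Counter()
    for url in urls:
        query = urlsplit(url).query
        # keep_blank_values=True so empty keys such as "key2=" are still reported
        keys = {key for key, _value in parse_qsl(query, keep_blank_values=True)}
        counts.update(keys)  # a set, so a key repeated within one URL counts once
    return counts
```

Calling `.most_common()` on the result gives a ranked list of parameters to review with your developers.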

Armed with this data, you can now decide how best to handle each of your website’s parameters.

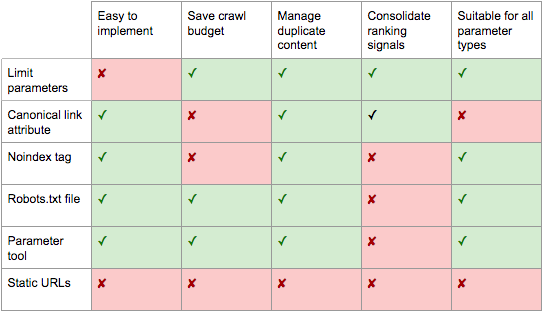

SEO Solutions To Tame URL Parameters

You have five tools in your SEO arsenal to deal with URL parameters on a strategic level.

Limit Parameter-based URLs

A simple review of how and why parameters are generated can provide an SEO quick win.

You will often find ways to reduce the number of parameter URLs and thus minimize the negative SEO impact. There are four common issues to begin your review with.

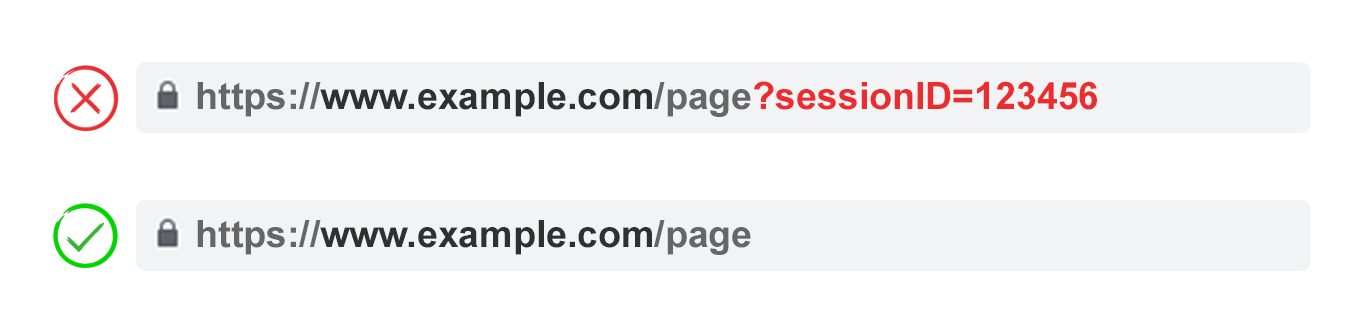

1. Eradicate Pointless Parameters

Picture created by creator

Picture created by creatorAsk your developer for an inventory of each web site’s parameters and their capabilities. Chances are high, you’ll uncover parameters that not carry out a priceless operate.

For example, users can be better identified by cookies than by session IDs. Yet the sessionID parameter may still exist on your website because it was used historically.

Or you may discover that a filter in your faceted navigation is never used by your visitors.

Any parameters caused by technical debt should be eliminated immediately.
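Once your developer confirms which parameters are obsolete, those keys can be stripped wherever URLs are generated or linked internally. This is a minimal sketch that assumes `sessionid` is the only obsolete key. The `OBSOLETE_KEYS` set and the `drop_obsolete_parameters` helper are hypothetical, so swap in whatever your own review turns up.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: keys your review found to have no remaining function.
OBSOLETE_KEYS = {"sessionid"}


def drop_obsolete_parameters(url, obsolete=OBSOLETE_KEYS):
    """Return `url` without any query-string keys listed in `obsolete`."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        # match case-insensitively, so sessionID and sessionid are both dropped
        if key.lower() not in obsolete
    ]
    # urlunsplit omits the "?" entirely when no parameters remain
    return urlunsplit(parts._replace(query=urlencode(kept)))
```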

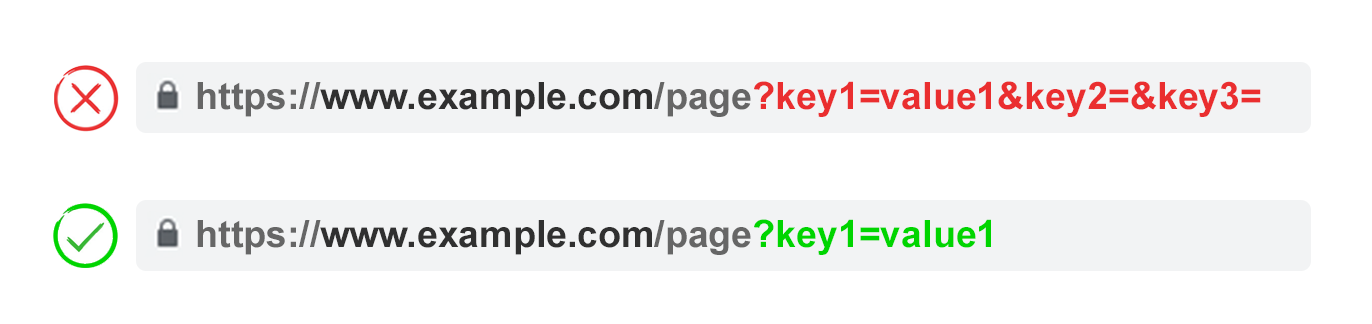

2. Stop Empty Values

Picture created by creator

Picture created by creatorURL parameters ought to be added to a URL solely once they have a operate. Don’t allow parameter keys to be added if the worth is clean.

In the above example, key2 and key3 add no value, both literally and figuratively.
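Blank values can be filtered out at the point where URLs are built. This is a minimal sketch using Python’s standard `urllib.parse`. The helper name and the example URL, in which `key2` and `key3` are empty, are illustrative assumptions.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def drop_empty_parameters(url):
    """Remove key=value pairs whose value is blank, e.g. "key2=" or a bare "key3"."""
    parts = urlsplit(url)
    # keep_blank_values=True so blanks are parsed, then filtered out explicitly
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    kept = [(key, value) for key, value in pairs if value.strip()]
    return urlunsplit(parts._replace(query=urlencode(kept)))
```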

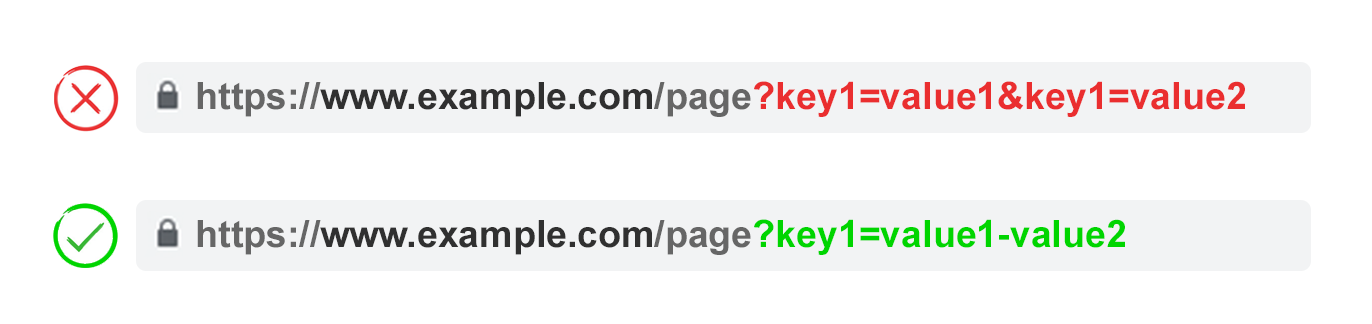

3. Use Keys Solely As soon as

Picture created by creator

Picture created by creatorKeep away from making use of a number of parameters with the identical parameter title and a special worth.

For multi-select choices, it’s higher to mix the values after a single key.
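Combining multi-select values under one key is a small transformation. This is a minimal sketch. It assumes your site’s back end expects comma-separated values; the delimiter and the helper name are assumptions, not a standard.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def merge_repeated_keys(url, delimiter=","):
    """Collapse ?color=purple&color=blue into ?color=purple,blue."""
    parts = urlsplit(url)
    merged = {}  # dicts keep insertion order, so first-seen key order is preserved
    for key, value in parse_qsl(parts.query, keep_blank_values=True):
        merged.setdefault(key, []).append(value)
    combined = {key: delimiter.join(values) for key, values in merged.items()}
    # safe=delimiter leaves the delimiter unencoded instead of turning "," into "%2C"
    return urlunsplit(parts._replace(query=urlencode(combined, safe=delimiter)))
```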

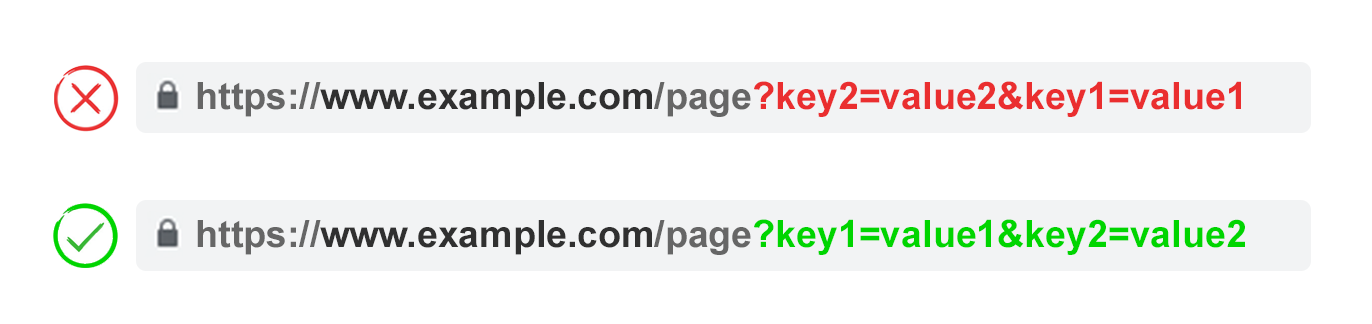

4. Order URL Parameters

Picture created by creator

Picture created by creatorIf the identical URL parameter is rearranged, the pages are interpreted by serps as equal.

As such, parameter order doesn’t matter from a replica content material perspective. However every of these combos burns crawl funds and break up rating indicators.

Keep away from these points by asking your developer to put in writing a script to all the time place parameters in a constant order, no matter how the consumer chosen them.

In my view, you must begin with any translating parameters, adopted by figuring out, then pagination, then layering on filtering and reordering or search parameters, and at last monitoring.
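A script of the kind described could look like the following minimal sketch. It relies on a hypothetical mapping from your site’s keys to the parameter groups listed earlier, then sorts by the precedence just described. Pushing unrecognized keys to the end is an assumption of this sketch, not part of the recommendation. Tie-breaking on key and value keeps the output identical however the user clicked.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical key-to-group mapping; build yours from your parameter inventory.
PARAMETER_GROUP = {
    "lang": "translating",
    "category": "identifying",
    "page": "paginating",
    "type": "filtering",
    "color": "filtering",
    "sort": "reordering",
    "q": "searching",
    "utm_medium": "tracking",
}

# Precedence from the recommendation above; reordering and searching share a slot.
GROUP_RANK = {
    "translating": 0,
    "identifying": 1,
    "paginating": 2,
    "filtering": 3,
    "reordering": 4,
    "searching": 4,
    "tracking": 5,
}
UNKNOWN_RANK = 6  # assumption: unmapped keys go last so they are easy to spot


def order_parameters(url):
    """Rewrite the query string into one consistent order, however it was built."""
    parts = urlsplit(url)
    pairs = parse_qsl(parts.query, keep_blank_values=True)

    def sort_key(pair):
        key, value = pair
        rank = GROUP_RANK.get(PARAMETER_GROUP.get(key), UNKNOWN_RANK)
        return (rank, key, value)  # key and value break ties deterministically

    return urlunsplit(parts._replace(query=urlencode(sorted(pairs, key=sort_key))))
```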

Pros:

- Ensures more efficient crawling.

- Reduces duplicate content issues.

- Consolidates ranking signals to fewer pages.

- Suitable for all parameter types.

Cons:

- Moderate technical implementation time.

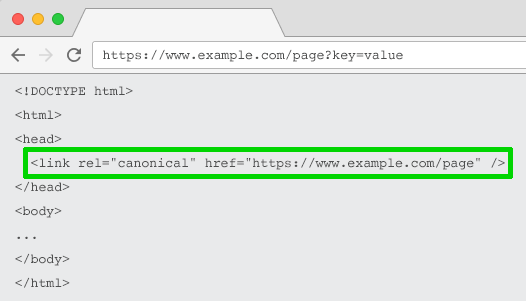

Rel=”Canonical” Hyperlink Attribute

Picture created by creator

Picture created by creatorThe rel=”canonical” hyperlink attribute calls out {that a} web page has an identical or related content material to a different. This encourages serps to consolidate the rating indicators to the URL specified as canonical.

You may rel=canonical your parameter-based URLs to your Web optimization-friendly URL for monitoring, figuring out, or reordering parameters.

However this tactic is just not appropriate when the parameter web page content material is just not shut sufficient to the canonical, corresponding to pagination, looking out, translating, or some filtering parameters.
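For tracking and reordering parameters, the canonical target is usually the same URL with those keys removed. This is a minimal sketch that assumes the listed keys are the ones your site uses for tracking and sorting; the key set and the helper name are hypothetical. Identifying parameters would need a lookup to their SEO-friendly URL instead, which this sketch does not attempt.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical tracking and reordering keys whose pages should point to a clean URL.
CANONICALIZE_AWAY = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort", "order"}


def canonical_link_tag(url):
    """Build a rel="canonical" link element for a parameter-based URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in CANONICALIZE_AWAY
    ]
    canonical = urlunsplit(parts._replace(query=urlencode(kept), fragment=""))
    # escape() turns "&" into "&amp;" so the href is valid inside HTML
    return f'<link rel="canonical" href="{escape(canonical)}">'
```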

Pros:

- Relatively easy technical implementation.

- Very likely to safeguard against duplicate content issues.

- Consolidates ranking signals to the canonical URL.

Cons:

- Wastes crawling on parameter pages.

- Not suitable for all parameter types.

- Interpreted by search engines as a strong hint, not a directive.

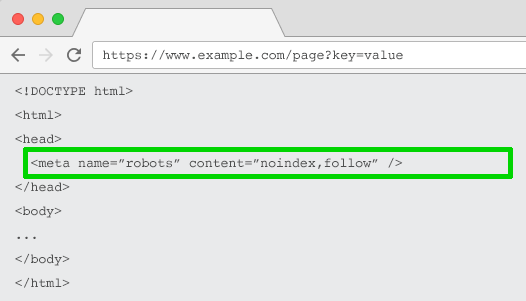

Meta Robots Noindex Tag

Picture created by creator

Picture created by creatorSet a noindex directive for any parameter-based web page that doesn’t add Web optimization worth. This tag will stop serps from indexing the web page.

URLs with a “noindex” tag are additionally prone to be crawled much less regularly and if it’s current for a very long time will finally lead Google to nofollow the page’s links.
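Deciding which pages get the tag can be expressed as a simple rule. This is a minimal sketch. It assumes pagination via `?page=` is the only parameter you want indexed, which mirrors the plan later in this article. The `INDEXABLE_KEYS` set and the helper name are hypothetical.

```python
from urllib.parse import parse_qsl, urlsplit

# Hypothetical: the only parameter keys whose pages should stay indexable.
INDEXABLE_KEYS = {"page"}
NOINDEX_TAG = '<meta name="robots" content="noindex">'


def robots_meta_for(url):
    """Return the noindex meta tag for parameter URLs without SEO value, else None."""
    query = urlsplit(url).query
    keys = {key.lower() for key, _value in parse_qsl(query, keep_blank_values=True)}
    # Any key outside the allow-list marks the page as not worth indexing
    if keys - INDEXABLE_KEYS:
        return NOINDEX_TAG
    return None
```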

Pros:

- Relatively easy technical implementation.

- Very likely to safeguard against duplicate content issues.

- Suitable for all parameter types you do not wish to be indexed.

- Removes existing parameter-based URLs from the index.

Cons:

- Won’t prevent search engines from crawling URLs, but will encourage them to do so less frequently.

- Doesn’t consolidate ranking signals.

- Only takes effect once search engines recrawl the page and see the tag.

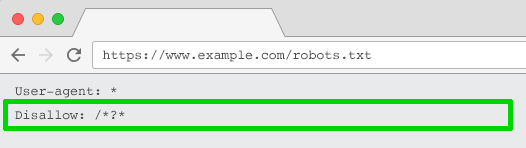

Robots.txt Disallow

Picture created by creator

Picture created by creatorThe robots.txt file is what serps have a look at first earlier than crawling your website. In the event that they see one thing is disallowed, they received’t even go there.

You should use this file to dam crawler entry to each parameter primarily based URL (with Disallow: /*?*) or solely to particular question strings you don’t need to be listed.
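Before deploying a wildcard rule such as `Disallow: /*?*`, it helps to check which URLs it would actually block. Python’s built-in `urllib.robotparser` does not implement the `*` and `$` wildcards that Google supports, so this is a hand-rolled, simplified matcher. It only evaluates Disallow patterns and ignores Allow rules and Google’s longest-match precedence. The helper names are hypothetical.

```python
import re
from urllib.parse import urlsplit


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    "*" matches any run of characters; a trailing "$" anchors the end of the URL.
    """
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_disallowed(url, disallow_patterns):
    """True if any Disallow pattern matches the URL's path plus query string."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    # re.match only anchors at the start, mirroring robots.txt prefix matching
    return any(pattern_to_regex(p).match(target) for p in disallow_patterns)
```

Note that robots.txt path matching is case-sensitive, so a rule for `sessionID=` will not block `sessionid=`.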

Pros:

- Simple technical implementation.

- Allows more efficient crawling.

- Avoids duplicate content issues.

- Suitable for all parameter types you do not wish to be crawled.

Cons:

- Doesn’t consolidate ranking signals.

- Doesn’t remove existing URLs from the index.

Move From Dynamic To Static URLs

Many people think the optimal way to handle URL parameters is to simply avoid them in the first place.

After all, subfolders surpass parameters in helping Google understand site structure, and static, keyword-based URLs have always been a cornerstone of on-page SEO.

To achieve this, you can use server-side URL rewrites to convert parameters into subfolder URLs.

For example, the URL:

www.example.com/view-product?id=482794

Would become:

www.example.com/widgets/purple

This approach works well for descriptive keyword-based parameters, such as those that identify categories, products, or filters for search engine-relevant attributes. It is also effective for translated content.
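On the application side, such a rewrite usually pairs with a 301 redirect from the old parameter URL to its new static path. This is a minimal sketch that assumes a lookup table from product ID to keyword-based path. In practice that table would come from your product database; `STATIC_PATHS` and the helper name are hypothetical.

```python
from urllib.parse import parse_qsl, urlsplit, urlunsplit

# Hypothetical mapping from the legacy ?id= value to the new keyword-based path.
STATIC_PATHS = {"482794": "/widgets/purple"}


def static_redirect_target(url):
    """Return the static URL a legacy /view-product?id= URL should 301 to, or None."""
    parts = urlsplit(url)
    if parts.path != "/view-product":
        return None
    product_id = dict(parse_qsl(parts.query)).get("id")
    new_path = STATIC_PATHS.get(product_id)
    if new_path is None:
        return None  # unknown IDs are left alone rather than redirected blindly
    return urlunsplit(parts._replace(path=new_path, query=""))
```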

But it becomes problematic for non-keyword-relevant elements of faceted navigation, such as an exact price. Having such a filter as a static, indexable URL offers no SEO value.

It’s also an issue for searching parameters. Every user-generated query would create a static page that competes for ranking against the canonical. Worse, it presents crawlers with low-quality content pages whenever a user has searched for an item you don’t offer.

It’s somewhat odd when applied to pagination (although not uncommon due to WordPress), which would give a URL such as

www.example.com/widgets/purple/page2

Very odd for reordering, which would give a URL such as

www.example.com/widgets/purple/lowest-price

And it is often not a viable option for tracking. Google Analytics will not recognize a static version of the UTM parameter.

More to the point: Replacing dynamic parameters with static URLs for things like pagination, on-site search box results, or sorting does not address duplicate content, crawl budget, or internal link equity dilution.

Having all the combinations of filters from your faceted navigation as indexable URLs often results in thin content issues, especially if you offer multi-select filters.

Many SEO pros argue it’s possible to provide the same user experience without impacting the URL, for example, by using POST rather than GET requests to modify the page content. This preserves the user experience while avoiding SEO problems.

But stripping out parameters in this manner would remove the possibility for your audience to bookmark or share a link to that specific page. It is also obviously not feasible for tracking parameters and not optimal for pagination.

The crux of the matter is that for many websites, completely avoiding parameters is simply not possible if you want to provide the ideal user experience. Nor would it be SEO best practice.

So we are left with this. For parameters that you don’t want to be indexed in search results (paginating, reordering, tracking, etc.), implement them as query strings. For parameters that you do want to be indexed, use static URL paths.
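That split can be built into whatever code generates your links. This is a minimal sketch that assumes `category` and `color` are the attributes your intent research marked as worth indexing. Those keys are hypothetical, as is the helper name. Everything else stays in the query string.

```python
from urllib.parse import quote, urlencode, urlsplit, urlunsplit

# Hypothetical: attributes that earn static, indexable path segments, in path order.
STATIC_KEYS = ("category", "color")


def build_listing_url(base_url, params):
    """Put indexable attributes in the path and everything else in the query string."""
    segments = [quote(params[key], safe="") for key in STATIC_KEYS if key in params]
    query = [(key, value) for key, value in params.items() if key not in STATIC_KEYS]
    path = "/" + "/".join(segments)
    parts = urlsplit(base_url)
    return urlunsplit(parts._replace(path=path, query=urlencode(query)))
```

For example, `{"category": "widgets", "color": "purple", "sort": "lowest-price", "page": "2"}` becomes `https://www.example.com/widgets/purple?sort=lowest-price&page=2`.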

Pros:

- Shifts crawler focus from parameter-based URLs to static URLs, which have a higher likelihood of ranking.

Cons:

- Significant investment of development time for URL rewrites and 301 redirects.

- Doesn’t prevent duplicate content issues.

- Doesn’t consolidate ranking signals.

- Not suitable for all parameter types.

- May lead to thin content issues.

- Doesn’t always provide a linkable or bookmarkable URL.

Best Practices For URL Parameter Handling For SEO

So which of these five SEO tactics should you implement?

The answer can’t be all of them.

Not only would that create unnecessary complexity, but often the SEO solutions actively conflict with one another.

For example, if you implement a robots.txt disallow, Google would not be able to see any meta noindex tags. You also shouldn’t combine a meta noindex tag with a rel=canonical link attribute.

Google’s John Mueller, Gary Illyes, and Lizzi Sassman couldn’t even decide on an approach. In a Search Off The Record episode, they discussed the challenges that parameters present for crawling.

They even suggested bringing back a parameter handling tool in Google Search Console. Google, if you are reading this, please do bring it back!

What becomes clear is that there isn’t one perfect solution. There are occasions when crawling efficiency is more important than consolidating authority signals.

Ultimately, what’s right for your website will depend on your priorities.

Image created by author

Personally, I take the following plan of attack for SEO-friendly parameter handling:

- Research user intents to understand which parameters should be search engine friendly, static URLs.

- Implement effective pagination handling using a ?page= parameter.

- For all remaining parameter-based URLs, block crawling with a robots.txt disallow and add a noindex tag as backup.

- Double-check that no parameter-based URLs are being submitted in the XML sitemap.
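The sitemap check is easy to automate. This is a minimal sketch using Python’s standard `xml.etree.ElementTree`. It assumes a single standard `urlset` sitemap in the sitemaps.org 0.9 namespace, so sitemap index files are not handled. The helper name is hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parameter_urls_in_sitemap(sitemap_xml):
    """Return every <loc> in a urlset sitemap that carries a query string."""
    root = ET.fromstring(sitemap_xml)
    locs = [el.text.strip() for el in root.findall("sm:url/sm:loc", SITEMAP_NS) if el.text]
    return [loc for loc in locs if urlsplit(loc).query]
```

An empty list means no parameter-based URLs are being submitted.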

No matter which parameter handling strategy you choose to implement, be sure to document the impact of your efforts on KPIs.


Featured Image: BestForBest/Shutterstock